As leading advocates of exceptional home entertainment audio, Xperi and DTS recognize that a major thrill of watching a movie is hearing the soundtrack the way the filmmaker intended, at cinema-level quality. The dynamic range possible in a cinema auditorium lets us experience music and sound effects at levels far above the dialogue level.

While dramatic loudness variations are expected and are part of the appeal of a movie or TV soundtrack, even when adjusted for a home viewing environment, they can be problematic when watching on a TV, especially at night when neighbors can hear through walls or when children are sleeping upstairs. In these scenarios, we frequently find ourselves reaching for the remote to turn the volume down during intense scenes, only to turn it back up when dialogue resumes. Additionally, dialogue can be obscured by overlapping music and sound effects, making it hard to hear clearly no matter how much we adjust the volume.

How is dialogue intelligibility typically addressed?

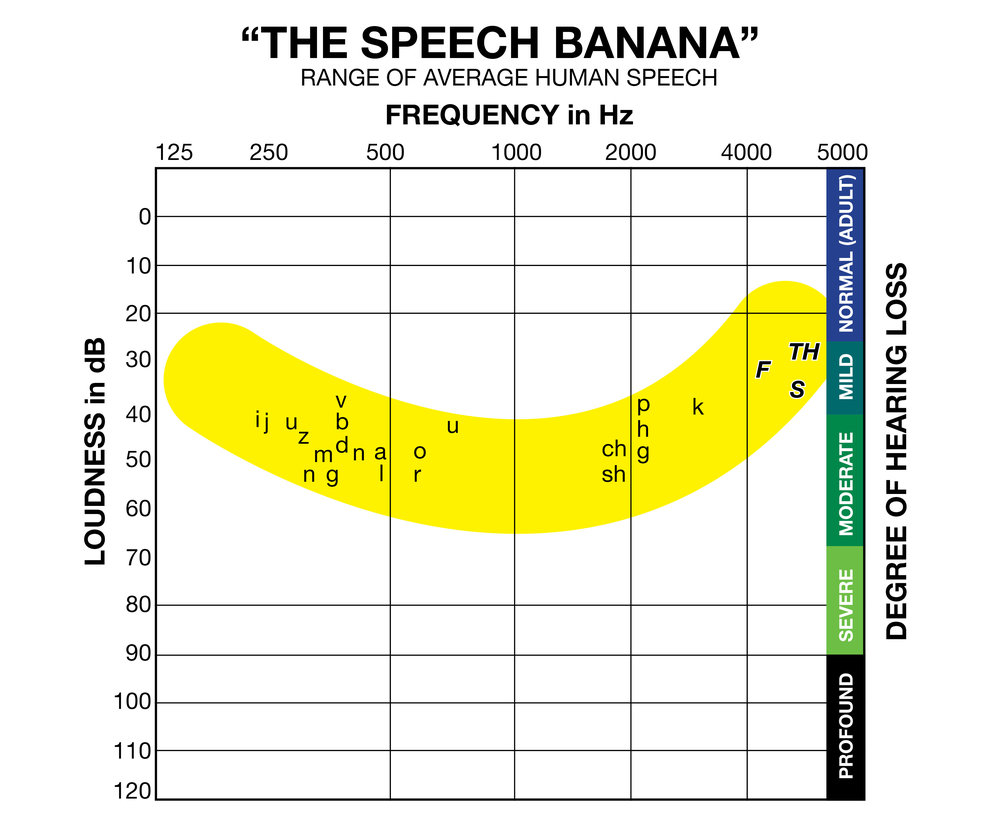

Many playback devices use dynamic range compression algorithms to manage loudness. These algorithms analyze the audio mix and automatically lower the volume when it becomes too loud. While effective in many cases, algorithms that are not well calibrated to the content’s dynamics can cause audible ‘pumping’ effects. Some TVs also feature “dialogue enhancement” modes, which boost the frequencies where speech typically occurs, usually between 125 Hz and 5,000 Hz, as depicted in the accompanying “speech banana” plot.

Source: Speech Banana on Wikipedia
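To make the dynamic range compression described above more concrete, here is a minimal sketch of a feed-forward compressor in plain Python. It is an illustration only, not the algorithm any particular TV or DTS product uses; the function names, threshold, ratio, and attack and release times are all assumed values chosen for the example.

```python
import math

def sine(amplitude, freq_hz=1_000.0, seconds=0.5, sample_rate=48_000):
    """Test tone used to stand in for quiet dialogue or a loud effect."""
    n = int(seconds * sample_rate)
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]

def compress(samples, sample_rate=48_000, threshold_db=-20.0, ratio=4.0,
             attack_ms=5.0, release_ms=200.0):
    """Feed-forward compressor: turn the signal down when it gets too loud."""
    # One-pole smoothing coefficients (assumed values, not tuned for real content).
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    out = []
    for x in samples:
        level = abs(x)
        # Track rising levels quickly and falling levels slowly.
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        # Only the part of the level above the threshold is reduced.
        over_db = max(level_db - threshold_db, 0.0)
        gain_db = -over_db * (1.0 - 1.0 / ratio)
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out

quiet_in = sine(0.05)   # stays below the threshold, so it passes unchanged
loud_in = sine(0.9)     # exceeds the threshold, so it is attenuated
quiet_out = compress(quiet_in)
loud_out = compress(loud_in)
```

The attack and release times control how quickly the gain reacts. When they do not suit the material, the gain changes themselves become audible, which is the "pumping" mentioned above.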

Speech equalization filters can enhance speech clarity by boosting these dominant frequencies and compensating for age-related hearing loss. However, this method can also affect non-speech elements, potentially creating an imbalance in the soundtrack, especially when music and effects are present. Boosting frequencies shared by both dialogue and background elements can exacerbate issues where dialogue is masked by background music.
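The limitation described above can be seen in a small sketch. The code below builds a single peaking-EQ biquad using the widely published Audio EQ Cookbook formulas and evaluates its gain at a few frequencies. The center frequency, gain, and Q are assumed values for illustration; real dialogue enhancement modes may use different filters entirely.

```python
import cmath
import math

def peaking_eq_coeffs(center_hz, gain_db, q, sample_rate=48_000):
    """Biquad peaking-EQ coefficients (Audio EQ Cookbook form)."""
    a_lin = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0 + alpha * a_lin, -2.0 * math.cos(w0), 1.0 - alpha * a_lin)
    a = (1.0 + alpha / a_lin, -2.0 * math.cos(w0), 1.0 - alpha / a_lin)
    return b, a

def gain_db_at(freq_hz, b, a, sample_rate=48_000):
    """Magnitude response of the biquad at one frequency, in dB."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate)
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20.0 * math.log10(abs(num / den))

# Assumed settings: a broad 6 dB boost centered in the speech range.
b, a = peaking_eq_coeffs(center_hz=1_000.0, gain_db=6.0, q=0.7)
boost_in_band = gain_db_at(1_000.0, b, a)   # full boost at the center frequency
boost_low_bass = gain_db_at(30.0, b, a)     # almost no change far below the band
```

The filter only knows about frequency, not about what is producing the sound. A music or effects component at 1 kHz receives the same boost as a voice at 1 kHz, so where the two overlap, the balance between dialogue and background does not improve.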

Another solution being explored is creating special mixes tailored for home viewing, such as Amazon’s Dialogue Boost. This feature offers alternative dialogue-focused mixes for Amazon Prime Video content, though it’s currently available only in English for a limited selection of programs. Other broadcasters and streaming services are considering similar solutions.

One limitation of alternative dialogue mixes is that content providers lack information about individual viewers’ listening environments, preferences or hearing abilities. As a result, they can only make general adjustments to enhance dialogue for a broad audience. Ideally, a soundtrack would be customized to individual preferences, physiology and listening environments. Achieving this would require content providers to store and distribute separate dialogue, music and sound effects tracks — a challenging task given the storage and copyright complexities involved.

Advancements in machine learning techniques offer a potential solution. Unlike traditional digital signal processing (DSP) algorithms, machine learning models can learn to “unmix” audio by identifying acoustic feature patterns. These models can be trained to separate audio tracks into dialogue, music and effects (DME) if provided with clean examples of these tracks. However, training such models on every possible soundtrack and its DME counterpart is impractical, because separate DME tracks are rarely available and their use is subject to data and copyright constraints.

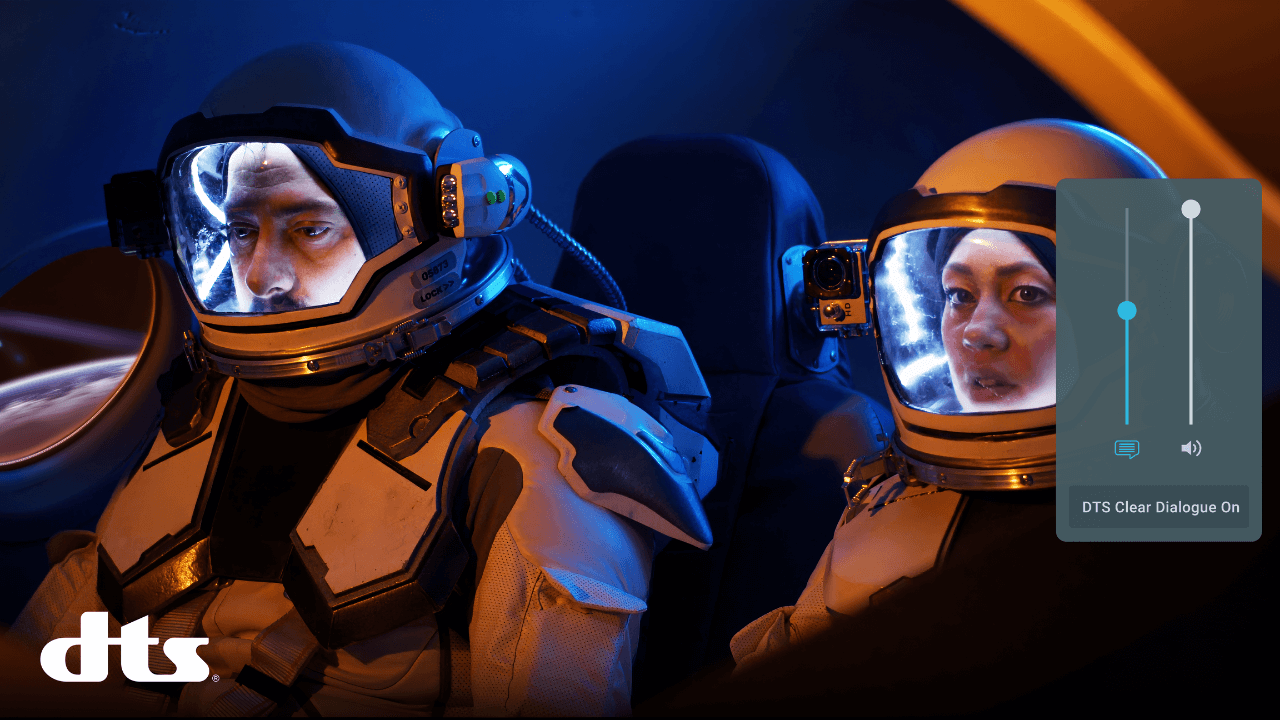

Introducing DTS Clear Dialogue

To overcome these limitations, Xperi and DTS use an optimized machine learning network trained on a diverse dataset so that it generalizes across various scenarios. We also generate synthetic training data using audio libraries that contain samples of typical soundtrack elements. This approach allows the network to learn from a wide range of audio examples while minimizing copyright issues. The trained model is then tested against real-world content to identify and address performance gaps, and it is continually refined with additional data and scenarios.
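As a rough illustration of how synthetic training data of this kind could be assembled, the sketch below picks one clip from each of three sample libraries, scales each by a random gain, and sums them into a mixture with known dialogue, music and effects stems. This is a hypothetical example, not the Xperi and DTS pipeline; the function name, the gain range, and the list-of-floats clip format are all assumptions.

```python
import random

def make_training_example(dialogue_clips, music_clips, effects_clips, rng,
                          gain_range_db=(-12.0, 6.0)):
    """Build one (mixture, stems) pair from randomly chosen, randomly scaled clips."""
    stems = {}
    for name, library in (("dialogue", dialogue_clips),
                          ("music", music_clips),
                          ("effects", effects_clips)):
        clip = rng.choice(library)
        # Random level per stem so the model sees many different balances.
        gain = 10.0 ** (rng.uniform(*gain_range_db) / 20.0)
        stems[name] = [gain * s for s in clip]
    # Trim every stem to the shortest clip so they can be summed sample by sample.
    length = min(len(s) for s in stems.values())
    stems = {name: s[:length] for name, s in stems.items()}
    mixture = [sum(vals) for vals in zip(*stems.values())]
    return mixture, stems

# Toy "libraries" with constant-valued clips, standing in for real audio samples.
rng = random.Random(0)
dialogue_lib = [[0.1] * 8]
music_lib = [[0.2] * 10, [0.3] * 6]
effects_lib = [[0.05] * 12]
mixture, stems = make_training_example(dialogue_lib, music_lib, effects_lib, rng)
```

Because the mixture is built from the stems, the correct separation is known exactly for every example, which is what a separation model needs as a training target.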

After extensive development that draws on decades of audio mixing and signal processing expertise, we are excited to introduce DTS Clear Dialogue. This feature enhances dialogue intelligibility for any content source, from traditional TV and movies to IPTV and user-generated content. DTS Clear Dialogue performs two main functions. First, it applies traditional DSP techniques to reduce the dynamic range, making loud effects quieter and soft dialogue clearer. Second, using machine learning techniques, it can separate dialogue from the rest of the soundtrack for independent processing, preserving the original intent of the filmmaker while improving clarity according to the end user’s specific preferences. The technology supports various control methods, including presets, sliders and even an extra control for dialogue level on the remote-control device.
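Once dialogue is available as its own stem, a user-facing dialogue level control becomes conceptually simple. The sketch below assumes a separator has already produced a dialogue stem and a background stem (that step is not shown), and recombines them with independent gains. The function and parameter names are hypothetical and do not represent the DTS Clear Dialogue implementation.

```python
def remix(dialogue, background, dialogue_gain_db=0.0, background_gain_db=0.0):
    """Recombine separated stems with independent level controls."""
    dialogue_gain = 10.0 ** (dialogue_gain_db / 20.0)
    background_gain = 10.0 ** (background_gain_db / 20.0)
    return [dialogue_gain * d + background_gain * b
            for d, b in zip(dialogue, background)]

# Toy stems: in practice these would come from the separation model.
dialogue_stem = [0.10, -0.20, 0.05, 0.00]
background_stem = [0.30, 0.10, -0.40, 0.20]

original_mix = remix(dialogue_stem, background_stem)                 # both controls at 0 dB
boosted = remix(dialogue_stem, background_stem, dialogue_gain_db=6.0)  # dialogue slider raised
```

With both controls at 0 dB the output is simply the sum of the stems, so the original balance is preserved unless the viewer asks for a change.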

The launch of DTS Clear Dialogue sets the stage for creating better sound experiences for everyone at home. We’re continuously looking at ways to measure and reduce the cognitive load associated with poor dialogue intelligibility, including for neurodiverse individuals and those with a wide range of hearing impairments. This launch is just the starting point. As we continue to refine our models and methodologies, we are also exploring additional audio source separation applications, further broadening the potential for machine learning in audio processing while solving our original problem statement: “What did they just say?!”
